Agenda-setting intelligence, analysis and advice for the global fashion community.

Virtual try-on has long promised a way to let online shoppers gauge how an item will fit before they buy it, but for all the ways companies have tried to make it work, it hasn’t caught on widely.

Google thinks it has a solution in generative AI.

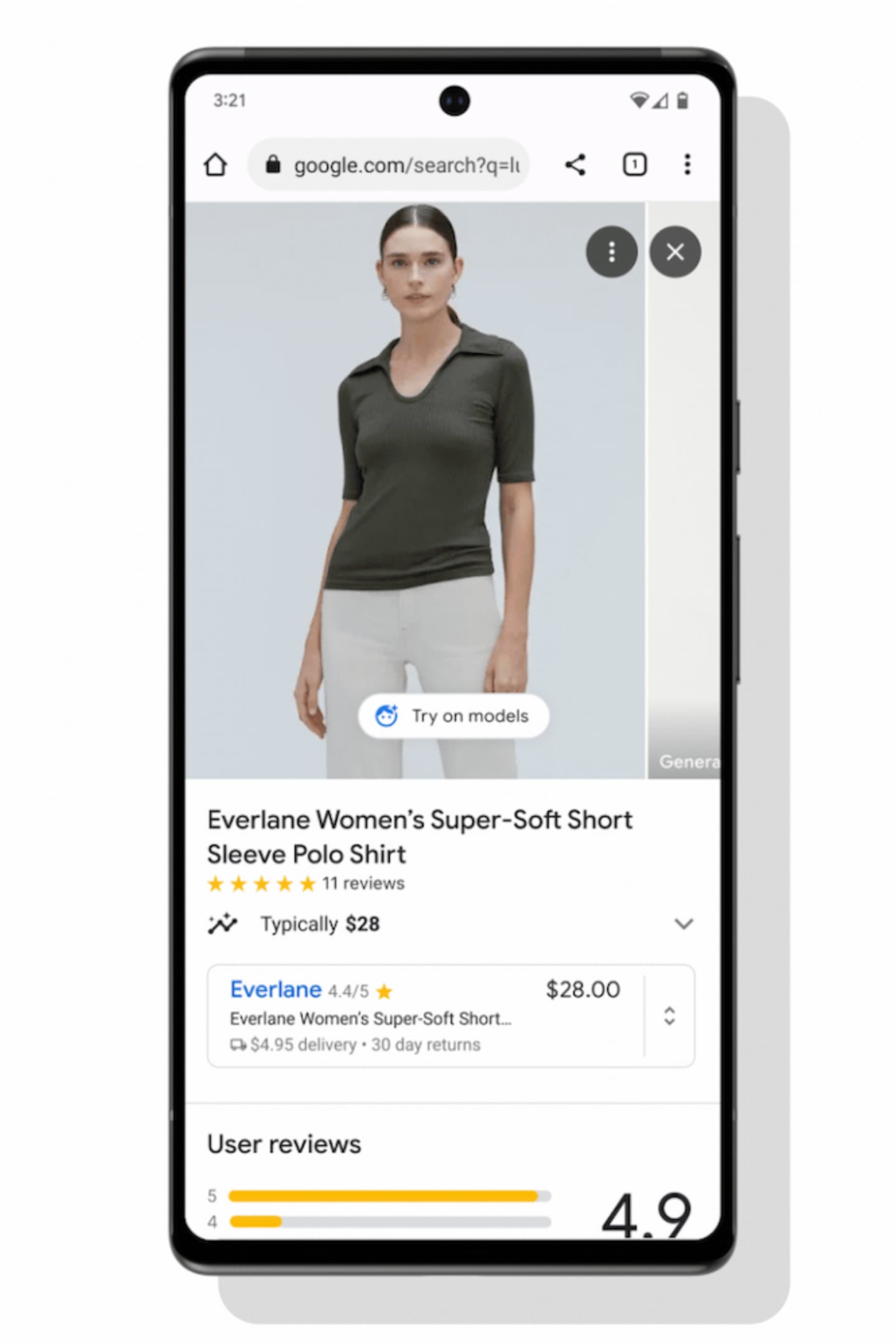

The market leader in online search unveiled a new feature Wednesday it says allows it to show how any garment will “drape, fold, cling, stretch and form wrinkles and shadows” on models from sizes XXS to 4XL. On search results where the option is available, users will see a “try on” label allowing them to choose a real person they want to see the item on, with the available models spanning a range of skin tones, body shapes and ethnicities.

Google is introducing the feature for US users on women’s tops from hundreds of brands, including H&M, Anthropologie, Everlane and Loft. The company will extend it to more products, including men’s tops, later this year and eventually plans to offer it internationally.

With the announcement, Google becomes the biggest contender yet to try its hand at virtual try-on. For years start-ups have looked to technologies like augmented reality and artificial intelligence to solve online shopping’s inherent stumbling block: It’s hard to know how an item will fit. Typically they’ve overlaid digital versions of garments on images shoppers provide of themselves. So far, companies and consumers haven’t widely adopted these tools, though the efforts keep gaining momentum as technology advances and companies like Walmart and Farfetch enter the field.

But even if Google isn’t showing shoppers a representation of a product on themselves, it believes its approach produces the best result — and could scale fast.

“We want to make sure that it’s natural [looking], and that I don’t think the industry has reached yet,” said Shyam Sunder, group product manager for commerce at Google. “We believe and hope that this is a massive step forward in virtual try-on.”

With other methods, a garment can look like it’s hovering over the body, or the user might have to stand in a rigid position, Sunder noted. Generative AI allows Google to create what Sunder believes is a far more realistic image, with the blending of wearer and garment happening at the pixel level.

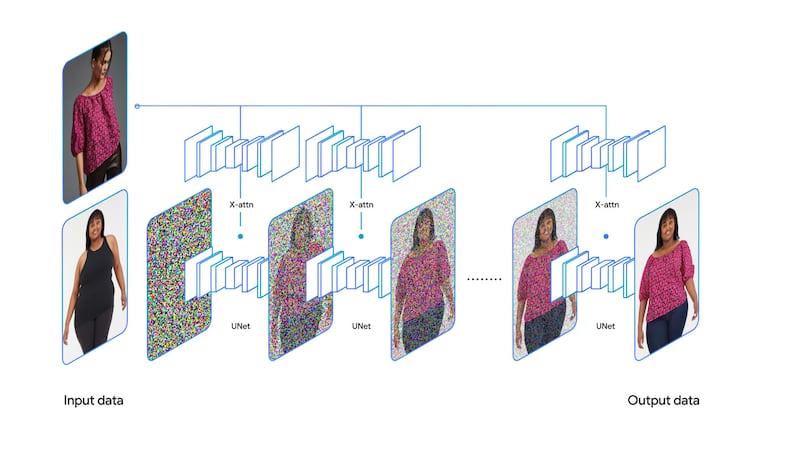

To do it, Google uses an AI technique called diffusion, which underlies its own image-generating system, Imagen, and others like OpenAI’s DALL-E. During training, “noise” is gradually added to an image’s pixels until the image is fully degraded, and the model learns to reverse the procedure and reconstruct it. This “denoising” process teaches the model how to produce new images from random data.
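The noising half of that process can be illustrated with a short sketch. It assumes the widely used formulation in which a noised image is a weighted mix of the original pixels and Gaussian noise; the schedule values, the four-pixel toy "image" and every function name here are illustrative assumptions, not details Google has disclosed about its system.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, rising linearly (illustrative values)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_signal(betas):
    """alpha_bar[t]: share of the original signal variance left after step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(pixels, alpha_bar, rng):
    """Jump straight to the noised version of `pixels` for a given alpha_bar."""
    keep = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    noise = [rng.gauss(0.0, 1.0) for _ in pixels]
    noisy = [keep * p + noise_scale * n for p, n in zip(pixels, noise)]
    # A denoising model would typically be trained to predict `noise` from `noisy`.
    return noisy, noise

rng = random.Random(0)
image = [0.8, -0.2, 0.5, -0.9]  # toy "image": four pixel values scaled to [-1, 1]
alpha_bars = cumulative_signal(linear_beta_schedule(1000))
for t in (0, 499, 999):
    noisy, _ = add_noise(image, alpha_bars[t], rng)
    print(t, round(alpha_bars[t], 4), [round(v, 2) for v in noisy])
```

At the first step nearly all of the original image survives; by the last step almost none does, which is the "fully degraded" state the generator later starts from.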

AI image generators can pair diffusion with language models to generate images from written prompts. But instead of text inputs, Google’s AI uses two images, one of a person and one of a garment, each of which it sends to a neural network — effectively a set of algorithms. The algorithms share information with one another and are able to generate a picture of the person wearing the garment.
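One common way for two networks to "share information" is cross-attention, in which features from one input decide how much of each feature from the other input to pull in. The article does not say which mechanism Google uses, so the sketch below is only an assumption for illustration, with hypothetical names and hand-picked toy feature vectors standing in for what real networks would compute.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross_attend(person_feats, garment_feats):
    """For each person feature vector, return a weighted blend of garment vectors.

    Real models apply learned projections to build queries, keys and values;
    they are omitted here to keep the mechanism visible.
    """
    dim = len(garment_feats[0])
    blended = []
    for query in person_feats:
        weights = softmax(
            [dot(query, key) / math.sqrt(dim) for key in garment_feats]
        )
        blended.append([
            sum(w * g[i] for w, g in zip(weights, garment_feats))
            for i in range(dim)
        ])
    return blended

# Toy features: three locations on the person, two regions of the garment.
person = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
garment = [[4.0, 0.0], [0.0, 4.0]]
mixed = cross_attend(person, garment)
for row in mixed:
    print([round(v, 3) for v in row])
```

Each person location ends up with a mix of garment features weighted by similarity, which is one way a model could decide which part of a garment belongs on which part of a body.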

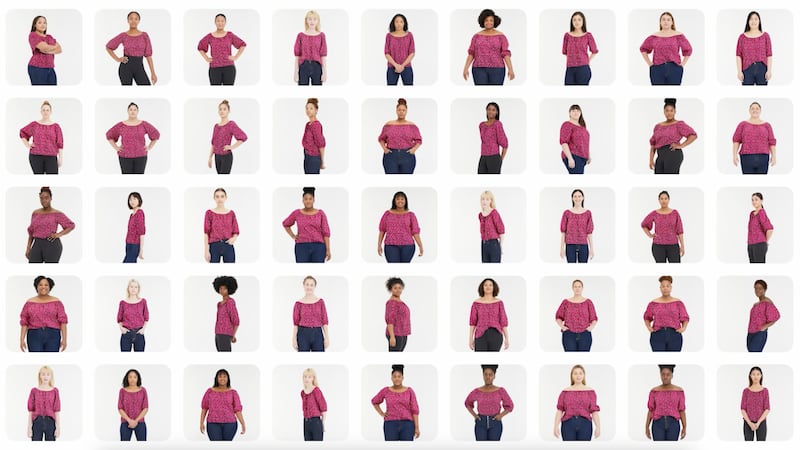

Google still had to photograph 40 women to serve as its models. (It also photographed 40 men for when it launches the feature for men’s items.) But Sunder said all Google needs from brands to create imagery of its models wearing one of their garments is a standard shot of the item on the brand’s own model. Brands can use a photograph like those they already create for e-commerce and send to Google for shopping listings, which would mean no added steps or costs for them.

So does Google believe it could one day offer the feature on any item it has a product listing for?

“That’s the hope,” Sunder said.

How shoppers will respond to Google’s try-on feature remains to be determined. But last year, when McKinsey surveyed consumers about different virtual technologies they were interested in, try-on for clothing and footwear ranked highest, suggesting shoppers still want a way to solve the issue of not being able to gauge fit while shopping online.

Consumers wouldn’t be the only ones to benefit if someone can crack virtual try-on. McKinsey noted the technology “could help ease the cost and complexity associated with record-breaking numbers of product returns.” Returns have become extremely costly for brands and can be a heavy burden on smaller companies with limited resources. Retailers of all sizes have been warming to the idea of charging for returns to compensate.

“We’re pretty confident that virtual try-on will help reduce returns,” said Maria Renz, Google’s vice president and general manager of commerce.

The issue was one that came up as Google consulted with brands about its new try-on feature. Retailers have come to depend on Google as a place to get their products in front of online shoppers, and Renz said the company works closely with them to try to understand — and hopefully solve — their pain points.

Technology is key to that effort, and generative AI is opening up new opportunities. Recently the company announced it was testing a new search experience that integrates generative AI, with its shopping vertical serving as a key testing ground.

Sunder said Google has had the virtual try-on feature in development for three years, but the methods it tried in the past didn’t yield a good enough result. It’s only with the emergence of new diffusion models, which Google, itself a leader in AI research, has built on, that the company was able to create a product it felt was ready to launch.

“We see e-commerce — commerce in general — with new technology becoming more immersive, more innovative,” Renz said.

Marc Bain is Technology Correspondent at The Business of Fashion. He is based in New York and drives BoF’s coverage of technology and innovation, from start-ups to Big Tech.
